Open Access

Letter to the Editor

Warning: Artificial intelligence chatbots can generate inaccurate medical and scientific information and references


The use of generative artificial intelligence (AI) chatbots, such as ChatGPT and YouChat, has increased enormously since their release in late 2022. Concerns have been raised over the potential of chatbots to facilitate cheating in educational settings, including essay writing and exams. In addition, multiple publishers have updated their editorial policies to prohibit chatbot authorship of publications. This article highlights another potentially concerning issue: the strong propensity of chatbots, when queried for medical and scientific information and its underlying references, to generate plausible-looking but inaccurate responses, including nonexistent citations. To illustrate this, a number of queries were submitted to two popular chatbots, and both generated inaccurate outputs. The authors therefore urge extreme caution, because unwitting application of inconsistent and potentially inaccurate medical information could have adverse outcomes.

Catherine L. Clelland ... James D. Clelland

View:4967

Download:295

Times Cited: 0

The use of generative artificial intelligence (AI) chatbots, such as ChatGPT and YouChat, has increased enormously since their release in late 2022. Concerns have been raised over the potential of chatbots to facilitate cheating in education settings, including essay writing and exams. In addition, multiple publishers have updated their editorial policies to prohibit chatbot authorship on publications. This article highlights another potentially concerning issue; the strong propensity of chatbots in response to queries requesting medical and scientific information and its underlying references, to generate plausible looking but inaccurate responses, with the chatbots also generating nonexistent citations. As an example, a number of queries were generated and, using two popular chatbots, demonstrated that both generated inaccurate outputs. The authors thus urge extreme caution, because unwitting application of inconsistent and potentially inaccurate medical information could have adverse outcomes.

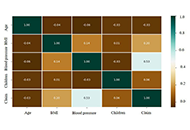

Published: January 10, 2024. Explor Digit Health Technol. 2024;2:1–6